by Jack Ox and David Britton


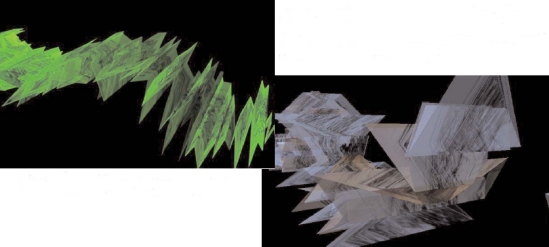

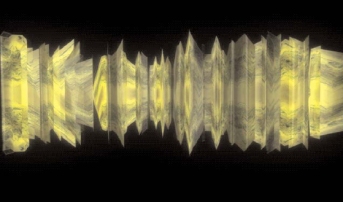

Since 1998, Jack Ox and David Britton have been developing an immersive music visualization system called the Virtual Color Organ (VCO), with initial support from Ars Electronica. For over 25 years Ox has been interested in visualizing music and its historical context since the early 20th century, but much of that work was done offline. It includes various systems of equivalence developed for music by Anton Bruckner, Igor Stravinsky, J.S. Bach, and Claude Debussy, and for the Ursonate, a 41-minute sound poem in the form of a four-movement sonata by the German Constructivist and Dada artist Kurt Schwitters. All of this inquiry was done in the analog world, but after experiencing installations in a CAVE 3D immersive VR environment at Ars Electronica in 1998, Ox felt that the next logical progression in her work would be dynamic visualizations, best realized in virtual spaces. The technological framework that David and Ben Britton created for the virtual reality project Lascaux offered a clear opportunity to build on historical experiments, both Ox's and others'. One of the most logical extensions of a music visualization system like the VCO is the immersive manifestation of algorithmically based compositions, first implemented with Im Januar am Nil, a chamber orchestral arrangement by one of Ox's colleagues, the Calcutta-born composer Clarence Barlow. [1] Systematic approaches to the realization of music have certainly surfaced throughout history, including in works by Mozart and Cage.
But to extend this historical lineage into visualization and take the idea of the 1970s color organ into immersive spaces, it seemed logical that the computer-generated composition should not only be reconstructed in the virtual landscape but should rise majestically over a metaphorical landscape whose elements were inspired by photographs of living rock taken in the deserts of California and Arizona. This desert landscape served as a metaphorical bridge between different land formations and the families of instruments in a traditional orchestra with chorus, creating a visual 'organ stop' in the VCO. To build this translated landscape, numerous pencil drawings were made from the photographs, representing metaphorical structures for traditional instrument groups such as woodwinds, brass, and percussion. These were then scanned into the computer and used as textures to cover both the polygons representing notes in the composition and the 3D landscape models created by Richard Rodriguez. Because Barlow's computer-generated composition was algorithmically based on a 2D spiral, the VCO represents it spatially as a parabolic spiral, with each note carrying a texture map that represents its metaphorical part of the landscape, its instrument group, and its timbral 'color.' As technologies such as the Internet2 AccessGrid opened further collaborative possibilities in immersive spaces, applying the principles learned through the VCO to an environment for musical performance became the natural next step, inspiring the GridJam. This project, conceived in 2000, proposed an AccessGrid performance by four geographically separated musicians 'jamming' together.
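To make the spiral layout of Barlow's piece more concrete, here is a minimal sketch; it is not the VCO's actual code. It assumes that the 'parabolic spiral' is Fermat's spiral, r = a·√θ, which is also known by that name; that a note's onset time sets its angle and its pitch sets its height; and that each instrument group maps to a single landscape texture. The `Note` class, the texture file names, and all parameter values are hypothetical.

```python
import math
from dataclasses import dataclass

# Hypothetical texture files: one scanned landscape drawing per instrument group.
GROUP_TEXTURES = {
    "strings": "strings_landscape.png",
    "woodwinds": "woodwinds_landscape.png",
    "brass": "brass_landscape.png",
    "percussion": "percussion_landscape.png",
}


@dataclass
class Note:
    onset: float  # seconds from the start of the piece (must be >= 0)
    pitch: int    # MIDI note number (0-127)
    group: str    # instrument group, a key of GROUP_TEXTURES


def place_note(note, a=2.0, turns_per_minute=1.0, height_per_semitone=0.1):
    """Return ((x, y, z), texture) for a note on a Fermat spiral r = a * sqrt(theta).

    Assumed mapping: onset time -> angle theta, pitch -> height z.
    """
    theta = 2.0 * math.pi * turns_per_minute * note.onset / 60.0
    r = a * math.sqrt(theta)
    position = (r * math.cos(theta), r * math.sin(theta), height_per_semitone * note.pitch)
    return position, GROUP_TEXTURES[note.group]


if __name__ == "__main__":
    # One hypothetical note per second for the first five seconds.
    for i in range(5):
        (x, y, z), tex = place_note(Note(onset=float(i), pitch=60 + i, group="strings"))
        print(f"t={i}s  x={x:6.2f}  y={y:6.2f}  z={z:5.2f}  {tex}")
```

One reason to prefer Fermat's spiral here is that successive turns draw closer together as it grows, so a long piece stays compact; an Archimedean spiral (r = aθ) would instead keep its turns evenly spaced.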
Although the GridJam's initial proposal was not funded, development continued with the help of Alvin Curran, who composed the structure for the improvised session on Internet2.

Development: Visual

Upon joining the team, Curran contributed 175 sounds from his collection, ranging from 2 to 54 seconds long. They include animals, birds, effects such as car crashes, bowling, and coins being tossed, various horns, and snippets of instrumental music and vocals. There are also short fragments of John Cage's speaking voice, hinting at the historical music practices that informed the GridJam. The sounds are to be loaded onto Disklaviers, hybrid digital/analog pianos, so that a player accesses Curran's sounds by striking programmed keys. In a networked performance, latency (network time lag) inevitably becomes an issue, but using a multitude of sound files as basic building blocks eliminates the regular beats that latency would otherwise disrupt. Instead of trying to overcome this 'problem,' we proposed that latency is an inherent aspect of networked, real-time performance. It will be used as an essential, celebrated element, metaphorically related to the rests between notes and phrases in conventional Western music. In our context, the challenges of networked performance will become compositional elements of chance and randomness, much like the quantization that occurs in human performance. In other words, the performers will be playing not only their parts but the Internet as well.

Texture maps for the models always start from the landscape image associated with the sound's group. This basic image is then modified in different ways, with varying repetitions across the object or with changes in material quality (e.g., transparent, metallic, or shiny). Together, these variations in the gradient patterns of timbre-related color create a visual model of the aural structures. All of the examples shown here are from the group whose texture map is associated with Strings; models from two other groups are available on the project website. To understand how the process unfolds, it helps to picture how the audience will experience the piece. Before the music starts, the audience, likely within a CAVE, digital planetarium, or other immersive space, will see the base desert landscape of the piece, covered in scans of the pencil drawings described earlier. This entire world is in black and white and feels much more like a hand-drawn world in motion than a virtual reality space. Each musician's 'space,' a unique quadrant of the landscape, will begin at a center point where the visualized sounds first appear. Over time, the visualized piece will move outward in concentric circles while folding back and forth over itself. This will happen at increasing altitudes; the folding makes room for the growing structure and conveys the metaphor of the sound waves being produced. In the currently proposed model, Curran would alter the basic sounds by applying real-time sound filters, and these changes would be reflected in the movement of the objects linked to those sounds.
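The outward, folding, rising growth of each musician's quadrant can be sketched as a function from time to position. This illustration rests on assumptions of our own rather than on the project's design: each ring of the 'concentric circles' lasts a fixed period, the 'folding' is modeled as the sweep across the quadrant reversing direction on every other ring, altitude rises linearly with time, and the function name and parameters are hypothetical.

```python
import math


def quadrant_position(musician, t, ring_period=20.0, ring_spacing=5.0, climb_rate=0.5):
    """Hypothetical placement of a sound event at time t (seconds) for musician 0-3.

    Each ring lasts ring_period seconds. During a ring the angle sweeps across the
    musician's 90-degree quadrant; on odd rings the sweep runs backward, so each
    ring starts where the previous one ended (the "fold"). Altitude grows with t.
    """
    if musician not in range(4):
        raise ValueError("musician must be 0, 1, 2, or 3")
    ring, phase = divmod(t, ring_period)
    frac = phase / ring_period
    if int(ring) % 2 == 1:
        frac = 1.0 - frac  # fold back on every other ring
    angle = musician * math.pi / 2 + frac * math.pi / 2
    radius = (ring + 1) * ring_spacing  # first ring sits just outside the center point
    altitude = climb_rate * t
    return radius * math.cos(angle), radius * math.sin(angle), altitude


if __name__ == "__main__":
    # Trace musician 0 over three rings, sampling every 10 seconds.
    for t in range(0, 60, 10):
        x, y, z = quadrant_position(0, float(t))
        print(f"t={t:2d}s  x={x:6.2f}  y={y:6.2f}  z={z:5.2f}")
```

Reversing the sweep instead of jumping back to the quadrant's starting edge keeps the path continuous, which is one simple way to read 'folding back and forth over itself.'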

Someone to watch over me

hora mortis finale drone

Development: Musical

To develop the musical dimensions of the GridJam, we met with Curran at UCSD and discovered that he had expanded his musical ideas considerably. Each performance node would now have four sound sources. The first two are acoustic and digital instrumentalists: one playing a hybrid digital/analog piano, and one playing a traditional non-chordal instrument such as a saxophone or trombone, equipped with a pitch-to-MIDI converter. The third would be a 'laptopist,' a performer using a computer to process sound samples and sequences in real time, generating both an audio output and a digital MIDI stream. Lastly, an ambient microphone pickup would be placed in a location that is symbolically significant or acoustically characteristic of the performance venue. Together, these four sources at each node, rather than the single source per node described earlier, could produce a depth and level of interaction not originally conceived. Combined with the metaphorical landscape of the virtual environment, the proposed configuration could produce remarkable experiences.

Coins spinning

To be a little more specific about the performance, the digital MIDI information generated by the first two players is mapped to banks of Curran's sounds, which are then processed in various ways, such as filtering, vocoding, and granular synthesis. Each filter, in turn, causes specific movements of the 3D objects, affecting their position or rotation, although these movements have not yet been refined. Overseeing all of this, a computer program acting as a master automated time controller will determine which samples are assigned to the notes played and what processing is applied to each sample. The sample-processing mechanism should be an agent-like program, operating autonomously and adaptively in the context of its various inputs. This component of the piece is still in development.
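The routing just described can be outlined in a few lines. In this hypothetical sketch, each of the first two players is assigned a bank of Curran's sounds, the MIDI note number selects a sample within that bank, and each kind of processing is paired with one kind of object motion. The file names, the process-to-motion table, and the `SampleController` class are illustrative assumptions; in particular, a seeded random choice stands in for the adaptive, agent-like program, which the text notes is still in development.

```python
import random

# Hypothetical pairing of sample processing with 3D object motion.
MOTION_FOR_PROCESS = {
    "filter": "rotate",
    "vocoder": "translate",
    "granular": "scatter",
}


class SampleController:
    """Toy stand-in for the master automated time controller."""

    def __init__(self, banks, seed=None):
        if not banks or not all(banks.values()):
            raise ValueError("need at least one non-empty sample bank")
        self.banks = banks
        self.bank_names = sorted(banks)  # stable player -> bank order
        self.rng = random.Random(seed)   # placeholder for an adaptive agent

    def assign(self, player, midi_note):
        """Return (sample, process, motion) for one note from one player."""
        bank = self.bank_names[player % len(self.bank_names)]
        samples = self.banks[bank]
        sample = samples[midi_note % len(samples)]
        process = self.rng.choice(sorted(MOTION_FOR_PROCESS))
        return sample, process, MOTION_FOR_PROCESS[process]


if __name__ == "__main__":
    banks = {
        "bank_a": ["coins_spinning.wav", "coins_tossed.wav"],
        "bank_b": ["choral.wav"],
    }
    controller = SampleController(banks, seed=1)
    for player, note in [(0, 60), (0, 61), (1, 64)]:
        print(player, note, controller.assign(player, note))
```

Keeping the sample choice deterministic (note number modulo bank size) while isolating the processing decision in one method would let an adaptive agent later replace the random choice without changing how notes reach the sample banks.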

From Britton's perspective as software developer for the VCO, the GridJam project will treat the performances of the laptopists and the 'musical agents' as key conceptual elements, both shaped by network latency. These conceptual elements relate to principles in complexity theory such as the 'strange attractor,' in which multiple variables in a system of equations interact to produce cyclical but unpredictable patterns. What makes these phenomena noteworthy is their sensitivity to stimuli that can provoke a system's transition from one attractor state to another. Metaphorically, such complex state transformations relate to the sudden or subtle changes in tempo, melody, or harmony that skilled improvising musicians make as they play. In this way, the GridJam expands upon past experiments with chance and probability in musical composition, taking them to levels of complexity (pardon the pun) not previously attained.

AccessGrid meeting for the GridJam at San Diego Supercomputing

On the last day of the UCSD sessions with Curran, a meeting was arranged on the AccessGrid node at San Diego Supercomputing, bringing together people from the GridJam partner institutions. An excellent addition to the team was Pierre Boulanger from the University of Alberta, who described his 3D video avatar system, which captures live video of the musicians as they play. This video can then be placed as stereo images on billboards in the virtual world, creating live video avatars. Boulanger's technology is very exciting in the context of this performance, as it would allow us to place 3D musicians in the Color Organ's desert environment. Our experiment on the AccessGrid taught us much, and it showed that many challenges remain, such as the limited kinds of interaction the AccessGrid allows, since it is primarily a video-based technology.
Furthermore, the configuration of the video portals presents other obstacles, as these have typically been projection walls in conference rooms, with multiple open windows showing video of the other participants. AccessGrid spaces are typically not performative spaces, from either the performers' or the audience's point of view. What impact the AccessGrid's technological constraints will have on GridJam remains to be determined and is a subject of continuing research.

Coins being tossed

One suitable installation space for the project might be a digital dome theater, such as the Lodestar Planetarium at UNM or the Pennington Planetarium at the Louisiana Art and Science Museum. As the number of these venues grows, performances such as GridJam could fill a unique niche for artists, audiences, and researchers alike. What has not happened yet, however, is linking such venues via Internet2, which is a high priority for the coming years and one of our most important goals. Larry Smarr, director of Cal(IT)2, has pointed out that artists often help move science forward because they always ask for more than the current status quo. We hope to follow that tradition with GridJam.

Choral

For over 25 years, Jack Ox has been exploring the rich aesthetic terrain of translating musical scores into visual metaphor. By partnering with colleagues such as Britton, Curran, Boulanger, and others, she has formed a collaborative team striving to create a unique form of artistic expression that draws on rich contemporary artistic traditions, science, and technology. Successful projects such as the Virtual Color Organ™ have made evident the potential of virtual spaces as locales for audiovisual experimentation. As GridJam is developed for rich performance possibilities via Internet2 and in spaces like digital planetaria, we believe that its synthesis of traditional and avant-garde expressive forms creates a unique experience and a fertile bed for our research.

The Virtual Color Organ™ has been supported by the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign, Boston University, and Cal(IT)2 and CRCA at the University of California, San Diego. SGI provided hardware support, and EAI provided Sense8’s World Toolkit VR library, which was used to develop the VCO. The Art and Technology Center and the High Performance Computing Center at the University of New Mexico have provided valuable help, as has the Lutchi Research Center at the University of Loughborough, UK, in developing the methodology for modeling Curran’s sound files.